This is the episode where they talk about Bill Gates' retirement and the contributions he made to the world and the industry. Their conclusion was that his biggest contribution was a strategy based on an understanding of Moore's Law. The thesis is that he understood that if you release something as soon as it will run (even if it runs badly), Moore's Law will bail you out shortly, and that this is more efficient than trying to make something that runs tight in the first place. I agree that this strategy was a huge part of Microsoft's success. It was clearly the correct strategy in hindsight, and it was foreseeably correct at the time given Moore's Law.

But I think that strategy is failing now and I think that this is a huge part of the reason why Vista is tanking. It doesn't have much to do with why Vista is not of interest to me (that is more around DRM and a closed mentality), but I think it is a big part of the greater market failure.

Why is the strategy failing? I have to introduce a concept here, and for the purposes of this article, let's call it "Klatte's Law". Klatte's Law states that at any moment in time, a user's computational needs can be represented as a gently sloping linear increase. The line will step up when the user discovers a whole new category of computational need (e.g. if someone who just does word processing starts doing video editing, there will be an enormous jump, but both before and after the jump it is a gentle slope).

Klatte's Law applies to mass market computing, but not to specialized niches like high performance computing.

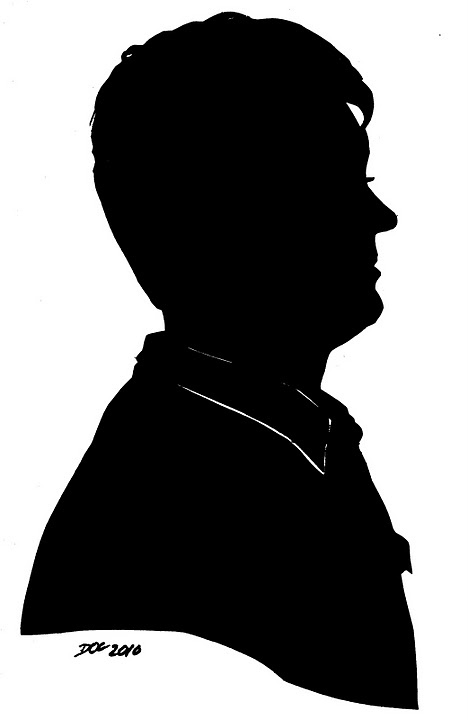

If you were to plot a zoomed in view of Moore's Law plotted with Klatte's Law, it might look something like this:

The strategy of ignoring tight code in favor of depending upon Moore's Law works as long as you are to the left of the intersection of Moore's Law and Klatte's Law, where the user's needs still exceed what the hardware can deliver. It starts to break down as you approach the intersection and fails completely to the right of it, where the hardware already does more than the user needs.
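To make the intersection concrete, here is a rough Python sketch. Everything numeric in it is an assumption chosen for illustration, not a measurement: the two-year doubling period, the starting capacity and need, the slope of the need line, and the function names themselves are all made up for this example. It just models capacity as an exponential and need as a gentle straight line, then scans forward for the first point where capacity catches up.

```python
def moore_capacity(t_years, base=1.0, doubling_years=2.0):
    """Available compute at time t, doubling every `doubling_years` (assumed)."""
    return base * 2 ** (t_years / doubling_years)


def klatte_need(t_years, start=4.0, slope=0.25):
    """A user's compute need at time t: a gently sloping line (assumed values)."""
    return start + slope * t_years


def crossover_year(step=0.01, horizon=50.0):
    """Scan forward in small steps and return the first time at which
    capacity meets or exceeds need, or None if it never does within
    the horizon."""
    steps = int(horizon / step)
    for i in range(steps + 1):
        t = i * step
        if moore_capacity(t) >= klatte_need(t):
            return t
    return None


if __name__ == "__main__":
    t = crossover_year()
    print(f"capacity catches up with need after about {t:.2f} years")
    # Left of the crossover the user is still waiting on hardware;
    # right of it, extra hardware buys the user nothing they need.
    for year in (2, 4, 6, 8):
        surplus = moore_capacity(year) - klatte_need(year)
        print(f"year {year}: capacity minus need = {surplus:+.2f}")
```

With these made-up numbers the lines cross somewhere between years four and five. The exact point does not matter; what matters is that the surplus is negative before it and grows quickly after it, which is the region where "Moore's Law will bail you out" stops being a selling point.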

Fundamentally, I think that the market as a whole is currently to the right of this intersection. This could change at some point if a killer app comes out that requires tons of computational power (but this killer app will not be an operating system). You can depend on Moore's Law to bail you out if you think you have that killer app, or if you are so far to the left of the intersection that the user already wants to upgrade their system.

But if you do not have a killer app and if the user is satisfied with their current system, you are toast.

"What do you mean I need a quad-core machine with twice as much RAM to run Vista well? My Core 2 Duo machine does everything I need just fine!"

Microsoft understands Moore's Law, but they don't understand Klatte's Law at all.

David

2 comments:

I had never thought to make my own law out of this, but I certainly agree with the concept. We have reached a point where the CPU is no longer the bottleneck in completing the tasks that we want our PCs to perform. My biggest problem is that my network performance is not keeping up with my "needs". Is there a law for that? If we eliminated the bandwidth problem I might start bumping into CPU limits again, but not in the near term.

Network bandwidth is definitely a big bottleneck. Klatte's Law applies, but I'm not sure Moore's Law does.

I think memory is a big bottleneck too, despite recent advances.

We will have "enough" RAM when our systems no longer need "virtual memory" to cover RAM shortfalls, but instead keep everything in RAM and have excess left over for unfettered buffering. And, of course, when we have operating systems and languages that take advantage of it.

Think of the problems that would suddenly become computationally feasible if you had a terabyte of usable RAM (pairwise comparisons of millions/billions of fingerprints for multidimensional clustering and the like).
